环境搭建&数据准备

(已有:conda 4.9.2)

创建新的虚拟环境deephageTP

1 | conda create --name deephageTP python=3.6 numpy theano keras scikit-learn |

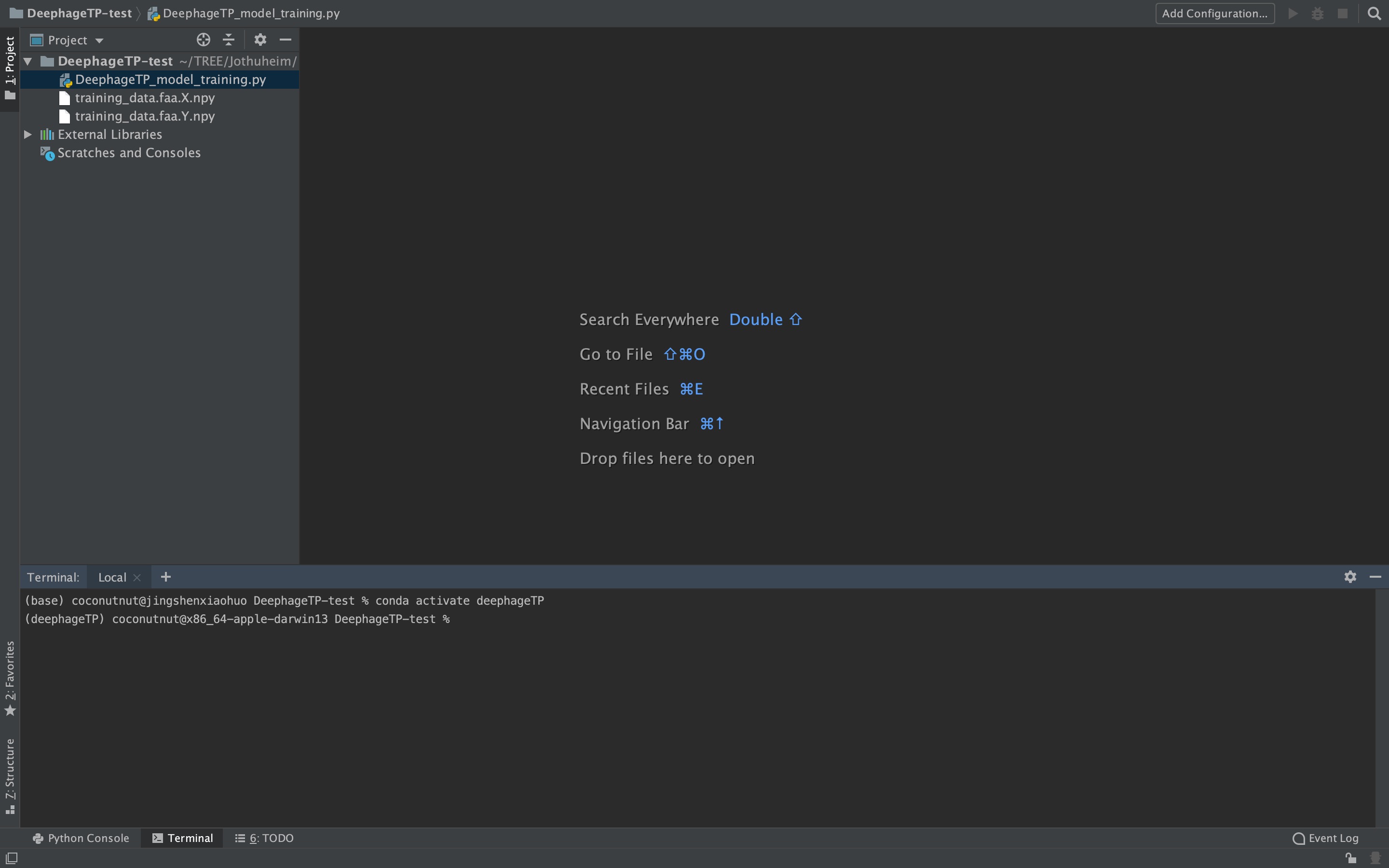

新建工程文件夹DeephageTP-test(需要的文件从下载的DeephageTP-master拷)

PyCharm打开DeephageTP-test,激活环境

1 | conda activate deephageTP |

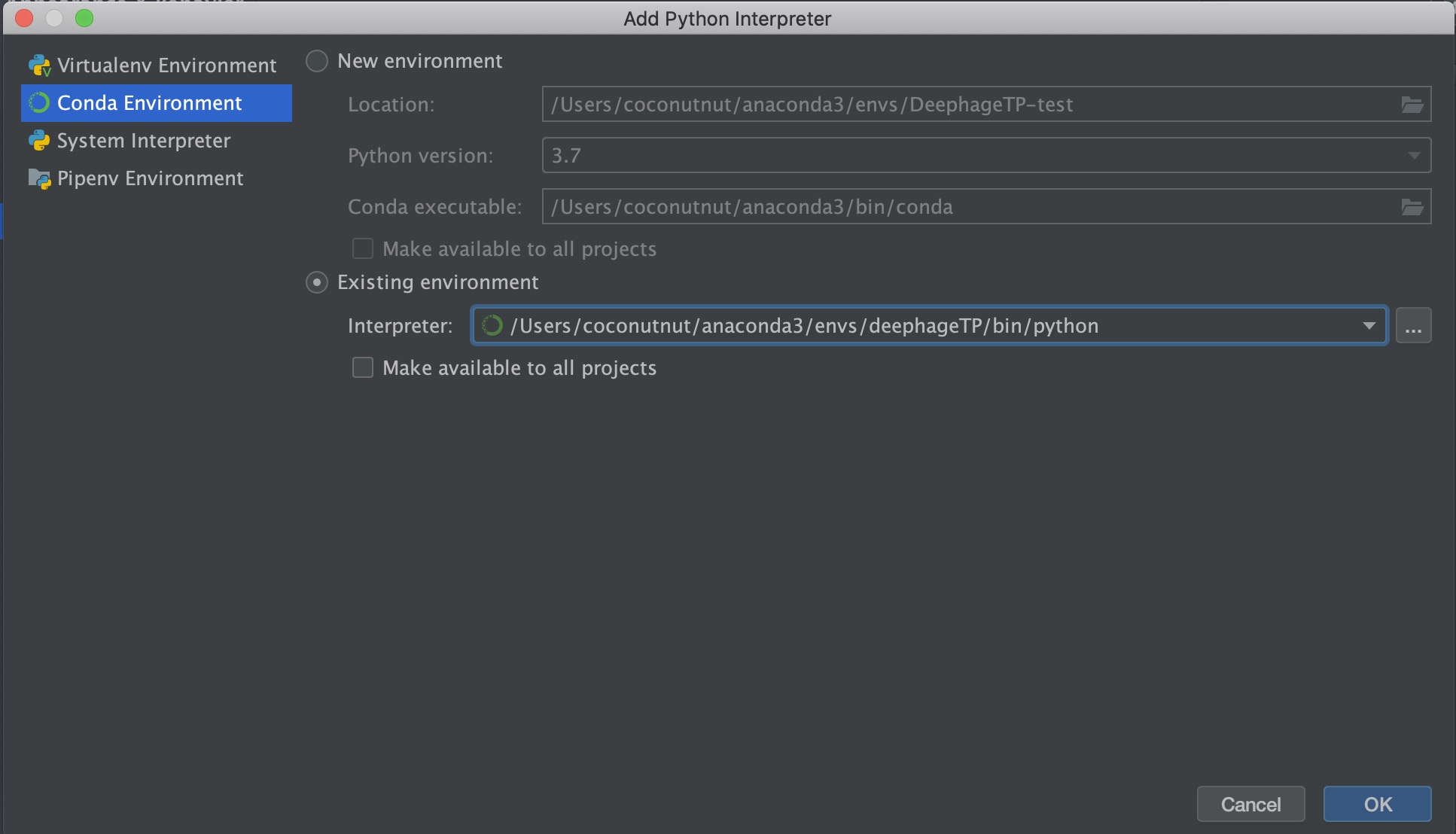

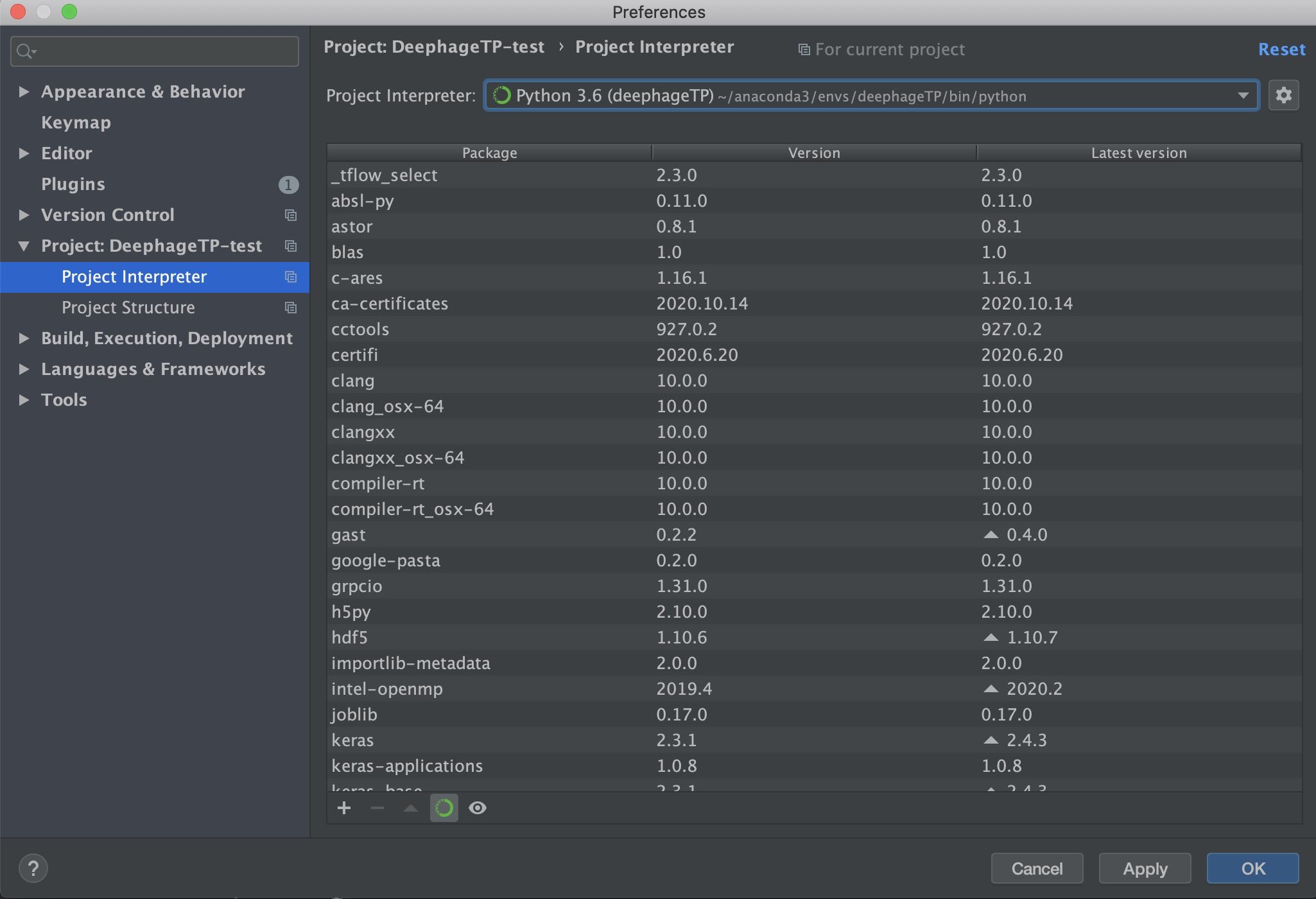

Python Interpreter也改成deephageTP

测试example_data.fa

代码里面直接读的training_data.faa,X和Y应该在同一个文件里,

但是给的training_data.faa.X.npy.tar.gz、training_data.faa.Y.npy.tar.gz是在不同文件

于是先用example_data.fa试一下(遇到bug #1,解决见Debug)

1 | (deephageTP) coconutnut@x86_64-apple-darwin13 DeephageTP-test % python DeephageTP_model_training.py |

产生了3个文件

example_data.fa.all.h5

example_data.fa.X.npy

example_data.fa.Y.npy

所以给的training_data.faa.X.npy.tar.gz、training_data.faa.Y.npy.tar.gz也是生成的吗?data文件夹里就一个叫a的空文件,难道是没传training_data.faa?😧

看了下代码,还真是🙄

aa_ref2npy()用来转格式,存成one-hot编码后,X和Y分开的形式

DL_Train()用来训练

既然如此,直接用training_data.faa.X.npy.tar.gz、training_data.faa.Y.npy.tar.gz解压,不跑aa_ref2npy(),就可以直接训练了

测试training_data.faa

解压training_data.faa.X.npy.tar.gz、training_data.faa.Y.npy.tar.gz

(25.3MB解压后3.97 GB,是得有多少0啊,不愧是one-hot)

代码改了2处:

第8行

1 | from sklearn.cross_validation import train_test_split |

改成

1 | from sklearn.model_selection import train_test_split |

最后的

1 | if 1: |

注释掉

运行

1 | (deephageTP) coconutnut@x86_64-apple-darwin13 DeephageTP-test % python DeephageTP_model_training.py |

太大了,不跑了

代码分析

训练部分 DeephageTP_model_training.py

aa_ref2npy()

做one-hot编码用的

把蛋白质序列↓

1 | >UniRef100_A0A017QK57 PBSX family phage terminase large subunit n=1 Tax=Glaesserella parasuis str. Nagasaki TaxID=1117322 RepID=A0A017QK57_HAEPR 1 |

one-hot编码,np.array格式,保存(分别存X和Y)

把X打出来看下

1 | [[0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0], 对应M |

就是和蛋白质序列MKI…一一对应的one-hot编码(还是手动写死的)

大小是(900, 20),和文中描述一样

这里Y是0,根据每个蛋白质第一行

1 | >UniRef100_A0A017QK57 PBSX family phage terminase large subunit n=1 Tax=Glaesserella parasuis str. Nagasaki TaxID=1117322 RepID=A0A017QK57_HAEPR 1 |

最后这个数字-1表示类别

如下面这个蛋白的类别是2

1 | >UniRef100_A0A072NPV4 Phage terminase, small subunit n=1 Tax=Bacillus azotoformans MEV2011 TaxID=1348973 RepID=A0A072NPV4_BACAZ 3 |

DL_Train()

1 | def DL_Train(ref_Data, len_w): |

Debug

#1

第一次跑DeephageTP_model_training.py时遇到的bug

1 | ModuleNotFoundError: No module named 'sklearn.cross_validation' |

解决:

https://blog.csdn.net/qq_35962520/article/details/85295228

1 | # from sklearn.cross_validation import train_test_split # cross_validation不再使用,移至model_selection |